For me, the word cyberspace has a dubious etymology. It is a shortened form of the word cybernetics, concatenated with the word space. The word cybernetics is derived from a Greek word which, depending on its context, can mean rudder, steersman, pilot or governor. The word space refers to a multi-dimensional medium through which conscious entities like humans may become aware of each other and interact. By consequence, the word cyberspace must refer to a multi-dimensional medium which some conscious entities use as a rudder or steering device through which to govern or control the rest of us. For me, this is pretty close to the truth.

Consequently, the multi-dimensional medium of cyberspace must include, first and foremost, terraspace — the physical biosphere of our planet. After all, our primary means of communicating is by travelling to meet and converse with others face-to-face. The biosphere is filled with air, which conducts the sound of the human voice. It is thus a medium through which human beings may converse. This medium is an ideal channel for human communication. Through it, normal human speech offers a channel of communication with a very high bandwidth over a conveniently limited distance. This facilitates the rapid interchange of knowledge, thoughts and ideas with safe and easy control between privacy on the one hand and publicity on the other.

Cyberspace must also include radiospace — what we know as the electromagnetic spectrum, comprising the full range of radio waves, through which we are able to communicate at a distance almost as if face-to-face. And finally, of course, it includes the Internet. Personally, I question whether or not these relatively recent technical advancements really offer any advantage to the well-being of humanity. My reluctant perception is that humanity certainly has yet to gain the necessary and sufficient wisdom to use them in an appropriate and constructive way rather than as a tool for conditioning, tranquillizing and exploiting gullible populations.

The following discourse is about all three of these aspects of cyberspace and what goes on within them, as perceived by me from where I stand within time, space and the social order.

Who Owns Terraspace?

Perhaps in the misty beginnings of humanity, people wandered unhindered across the bountiful surface of their native planet, able freely to communicate and interact with their peers. But it was not long before kings began to annex the Earth's habitable land, and the resources it contains, as a means to govern and control the majority of mankind and thereby command his labour. This was done firstly by force of arms then by legal instruments such as the "Inclosure Acts" (1604 to 1914) in the United Kingdom.

Nowadays, the only way the individual may move across the surface of our planet is via a transport infrastructure, which is far from free, and within which, the individual's movements are severely restricted, regimented and controlled. The Earth is no longer free to wander.

Today, individuals may communicate and interact across the physical surface of the planet, but only by the leave of its State and corporate owners. And these require payment for their leave. They also impose the rules as to which restrictive routes the individual may use and how and when and under what conditions he may use them. They control all land. Control is ownership. Even in the prosperous First World economies, the individual has no allodial claim over any part of Planet Earth. He even has to pay for the privilege of "owning" his little brick box parked on its postage stamp lot in suburbia.

In my opinion, this is not an idyllic state for the human species. Each individual is an independent sentient being. Collectively, humanity is not a conscious entity. Neither is a family, a State or a corporation. These have no personal fear or feeling. Consequently it is the well-being of each conscious individual that matters. Each individual should therefore rightly have free and unencumbered economic use of his fair share of terraspace, and the right of free passage through all terraspace on the understanding that he does not disturb its economic use by its allotted user. No collective has the moral power to take these rights from the individual.

Who Owns Radiospace?

In 1867, James Clerk Maxwell formed his mathematical prediction of the existence of radio waves. Twenty years later, Heinrich Hertz managed to generate them artificially. Subsequent experiments showed that these waves could be made to bounce between the ground and the ionospheric layers high above and thereby propagate around the world. Before these events, nothing was known about these waves, or about the dimensions of space-time across which they travelled. And what is unknown can be commandeered by neither king nor corporation. Consequently, upon its discovery, the radio spectrum was an untouched virgin land, wild, unclaimed, free. Anybody could build his own apparatus and experiment to his heart's content.

But not for long. As with the open continents of the New World, kings and emperors, enterprises and corporations, soon looked upon the radio spectrum with possessive eyes. Each mustered his mighty power to divide and conquer it for lucrative gain. The kings of the Earth each soon declared his allodial possession, within his respective jurisdiction, of the newly revealed dimensions of space-time through which travelled the electromagnetic wave. Common man could no longer venture therein, except by the leave of his king. Thus, like land, the radio spectrum had become subjected to what could be viewed as another form of "Inclosure Act".

For king or State, radiospace is invaluable for administration, defence and as a source of common revenue. Thus, today, the king (as an embodiment of the State) reserves some of the radio spectrum for use by his military and civil services. He licenses the use of what remains to his subjects, in return for a fee. He licenses some of it to broadcasters, some to the merchant marine, some to aircraft operators, some to commercial communications carriers, some to private communication, and even some to amateur radio enthusiasts.

As always when a king grants his leave, it comes with terms and conditions. In the case of licence to use the radio spectrum, it comes with very restrictive terms and conditions. The licence holder is restricted not only as to what frequency range and power he may use, but also to what kind of information he may send and receive.

The restrictions placed upon radio amateurs are simply Draconian. Political, religious and commercial comments or discussions are specifically prohibited. It is probably unsafe even to discuss philosophy. In fact, the only topics that radio amateurs are safely able to discuss are their equipment, the weather and signal conditions. This makes for tediously boring conversation. Furthermore, however boring it may be, a chat between radio amateurs can never be private. Section 2, Clause 11(2)b of the "Terms, conditions and limitations" of the UK's Amateur Radio Licence states that "Messages sent from the station shall not be encrypted for the purposes of rendering the Messages unintelligible to other radio spectrum users."

Radiospace is thus strictly off-limits to any lowly individual who may wish to discuss — either privately or openly — his own philosophical, political, religious or commercial ideas and opinions with his fellow human beings. I sometimes vainly try to imagine what it would be like to be a member of a group of fictitious egalitarians who alone knew of the existence of radio waves and how to use them to communicate. We would have the unencumbered freedom, without cost, to discuss whatever we liked, unhindered by any restrictions and regulations imposed by king or State. Even if my friends and I had discovered a secret technique, such as chipped digital spread-spectrum transmission, way back in the 1950s, we could have communicated by radio among ourselves without any authority of the time even being aware of our signals.

No State wants individuals to have the unlicensed and uncensored freedom to be able to discuss any and all topics with any other arbitrary individual within its realm. If they could, like minds may connect. Connected they would become united. United they could not be divided. Undivided they could not be ruled. And that would pose a real and present threat to the established order.

Unlike terraspace, radiospace is three-dimensional and is not confined to the surface of the Earth. It occupies the entire universe. So, to what extent can a sovereign state lay claim to it? It cannot be fenced like land. A State cannot stop radio waves from crossing its national frontiers. The only extent to which a State can lay claim to the possession of radiospace is to control its use by its subjects. The only way it can do this is by imposing a legal penalty upon any individual who uses radiospace, from within its territorial boundaries, without licence or in a manner or for a purpose that its law forbids. The State polices its radiospace, to see who may be violating this aspect of its "sovereign territory", simply by monitoring the radio spectrum from official listening stations.

Having imposed rigorous control over who may or may not transmit signals from within their territories, some sovereign states even forbid their subjects from passively receiving radio signals without having paid a licence fee. This requirement was abolished for radio in the United Kingdom in 1971, but it still remains for receiving television programmes.

The licence fee money is purportedly used for creating programmes and running the transmission services. Notwithstanding, it places the State's broadcasting authority in the position of receiving unconditional finance to produce what the authority wants the people to see and hear, which is not necessarily what the people want to see or hear. More disturbingly, it creates a facility by which the State and its broadcasting authority may steer and manipulate the public mind, making it an effective means of controlling society to conform to the will of the State and the elite minority who actually influences and controls it.

A sovereign state cannot stop radio waves from other sovereign states from entering its territory. Neither can it stop radio waves emanating from within its jurisdiction from leaving and entering the territories of other sovereign states. A particular State may wish to prevent foreigners listening to its domestic broadcasts. It can try to do this by limiting power and/or using line-of-sight frequency bands. More likely, though, it may wish to prevent its subjects from receiving broadcasts from certain other foreign States, for conflicting political reasons. This it can achieve by transmitting jamming signals on the same frequency as the offending broadcast, as happened during the Cold War. Nowadays, with digital transmission, complete control of who may or may not receive a broadcast can be effected by encrypting the signal.

So, having discovered it, how should mankind use radiospace? The answer, in my opinion, is in a way that is fair to every individual. Every individual, should he wish to, must be free to use radiospace to seek and find like minds and discuss openly and freely his ideas with them. He should also be free to broadcast his ideas to any who may wish to listen. He must also be free to contact anybody casually or for some specific reason. Equally, he must have the right to privacy from being pestered by persistent callers, such as with the present unwelcome epidemic from telesales call centres.

Technology exists whereby all this could be easily implemented without common infrastructure. However, for it to work equitably, there must be some form of central coordination. A single entity must exist to allocate spectrum usage and session channels and to maintain search indexes. But such an entity must not be an active authority. It must have no coercive power. Neither should it ever be allowed to fall into the hands of private commercial interests. It must be a passive system, implemented as a distributed technology within the equipments of all its users.

Who Owns The Internet?

Radiospace is an aspect of the natural universe. The Internet is not. Two radio amateurs, in different parts of the world, may communicate with each other over HF radio, using transceivers designed, built and owned entirely by themselves. In this case, their two transceivers are connected by entirely natural means. Two geeks, in different parts of the world, may communicate with each other over the Internet, using personal computers designed, built and owned entirely by themselves. In this case, however, what connects their two personal computers is a vastly complicated and expensive artificial infrastructure.

The justification for the extreme cost of the Internet's infrastructure is that radiospace simply does not have the capacity for the data transfer speeds demanded by all the Internet's users. At least, not the way the Internet is currently implemented. And the way it is implemented has a lot to do with how the Internet was born, developed and evolved.

One part, of what became the Internet, started from the wish of scientists and academics in research centres and universities around the world to be able to rapidly exchange scientific papers and experimental data. They opted for the quick solution of renting the use of cables or packet services from national telecommunications authorities and corporations. Another part started as networks linking government centres in various countries, again using rented cables. A third part started as separate private international data communications networks, also operated across rented cables, by various large multinationals. These three parts and their various elements all finally, by mutual agreement, became interconnected to form the Interconnected Networks or Internet.

Consequently, the connecting cables are owned by national telecommunications authorities and licensed private telecommunications corporations, each within its designated geographical jurisdiction, throughout the world. These may also own the switching nodes (or routers), at the various junctions in the cable routes. Switching (or routing) equipment may, however, also be owned by private corporations who rent the use of the cables connecting their private switching nodes. All these together form what is called the Internet Backbone, which spans the entire globe.

A broad-brush logical view of the global backbone is shown on the left. The width of each coloured line depicts the relative amount of data traffic flowing between each pair of the 5 main regions of the world. By far the busiest route is between the USA and Europe. Practically 100% of Latin America's traffic, bound for the rest of the world, flows via the USA. The one glaring omission is a route linking Latin America with Africa. With practically all the world's data traffic flowing via the USA, the USA is effectively able to intercept, monitor, record, interrupt or stop all data flowing around the world.

All private interests operating within the USA, including all owners and operators of Internet cables and routers, through which practically all the world's Internet traffic passes, are subject to US law. Consequently, the government of the United States of America — through its various agencies — is able to control the flow of traffic within the physical infrastructure of the Internet worldwide.

An Internet Router sits at each junction of the Internet. Its job is to forward each arriving data packet, down the next appropriate leg of its route, to its final destination. Each data packet contains information about its origin and destination addresses. Most routers are located in US territory and are therefore under the jurisdiction of US law.
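The forwarding decision described above can be sketched in a few lines. This is only an illustration under stated assumptions: the table entries, the leg names and the function `next_leg` are hypothetical, and the rule of preferring the most specific matching prefix is the commonly used one rather than anything particular to a given router.

```python
import ipaddress

# Hypothetical forwarding table: destination prefix -> name of the next leg.
# The prefixes are documentation ranges, not real routes.
FORWARDING_TABLE = {
    ipaddress.ip_network("0.0.0.0/0"): "default-uplink",
    ipaddress.ip_network("203.0.113.0/24"): "leg-a",
    ipaddress.ip_network("203.0.113.128/25"): "leg-b",
}


def next_leg(destination: str) -> str:
    """Pick the next leg for a packet from its destination address.

    Every prefix containing the address is a candidate; the longest
    (most specific) prefix wins.
    """
    address = ipaddress.ip_address(destination)
    candidates = [net for net in FORWARDING_TABLE if address in net]
    best = max(candidates, key=lambda net: net.prefixlen)
    return FORWARDING_TABLE[best]


if __name__ == "__main__":
    for dest in ("203.0.113.200", "203.0.113.5", "198.51.100.1"):
        print(dest, "->", next_leg(dest))
```

The point to notice is that the router needs nothing more than the destination address in the packet header to make its decision.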

The US government is therefore in a position to be able to issue a legal instrument to a router administrator forbidding the forwarding of data packets travelling between a specified [home or foreign] origin and a specified [home or foreign] destination. With appropriate software or chip firmware covertly embedded within a router computer, a US government agency could effect the blocking remotely, without the router administrator even being aware of it.
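To show how little is technically needed for such blocking, here is a minimal sketch of an origin and destination filter. It is not a description of any real router software: the rule list, the address ranges and the function `should_forward` are all hypothetical, chosen only to illustrate the idea.

```python
import ipaddress
from typing import NamedTuple


class BlockRule(NamedTuple):
    """A forbidden pairing of an origin range with a destination range."""
    origin: object       # an ipaddress network
    destination: object  # an ipaddress network


# Hypothetical rule: drop traffic from one documentation range to another.
BLOCK_RULES = [
    BlockRule(
        ipaddress.ip_network("198.51.100.0/24"),
        ipaddress.ip_network("203.0.113.0/24"),
    ),
]


def should_forward(origin: str, destination: str) -> bool:
    """Return False when the packet's addresses match any block rule."""
    src = ipaddress.ip_address(origin)
    dst = ipaddress.ip_address(destination)
    return not any(
        src in rule.origin and dst in rule.destination for rule in BLOCK_RULES
    )


if __name__ == "__main__":
    print(should_forward("198.51.100.7", "203.0.113.9"))  # matches the rule
    print(should_forward("198.51.100.7", "192.0.2.1"))    # matches no rule
```

A packet is dropped only when both its origin and its destination fall within a listed pairing, so the rest of the traffic passes through untouched and nothing appears amiss.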

I am not suggesting that they do this. But the general adage is that: if they can, they will. They may be thus able, at will, to determine whether or not a web site in one foreign country be visible or not in a different foreign country.

So forget trade sanctions. The greatest and most devastating form of sanction the USA could impose on practically any country in the world is the IP packet sanction: the refusal to route packets originating from [or destined to] a target country across US territory. The Prism Program diagram above shows that, for by far the most part, the victim would have no other route.

At the time of writing, I don't think the USA is the kind of country to do this. But one must bear in mind that, as a pure consequence of human nature, any country could in the future fall victim to a fascist regime with policies of exclusive national self-interest embodying Draconian attitudes of arrogant disrespect and overt bullying towards the rest of the world.

Notwithstanding, effective ownership of the Internet's physical infrastructure does not procure control over what kind of information it carries, and between who and whom. The Internet is internationally open. Hence, the semantic content of the data traffic flowing through it is outside the direct control of any one sovereign state or legal jurisdiction. To truly control the Internet, it is necessary to construct some means of controlling what kind of semantic content may pass through the Internet and who may and who may not access it.

Because of its central position, within the worldwide infrastructure of the Internet, the USA has, through active selective encouragement, managed to establish multiple means of achieving total control of Internet content — or, at least, world public access to it. These means are, for all practical purposes, exclusively concentrated within United States jurisdiction, where, again, they can be regulated by US law. The government of the United States has thereby effected a worldwide "Inclosure Act" upon cyberspace.

Control of The World-Wide Web

A scientist or academic — or indeed, anybody who may want to — can publish his observations, experiences, sufferings and opinions on the worldwide web, as in the example of my own web site shown on the right. All he needs to do is write his thoughts in a text file, mark it up in HTML [the hypertext mark-up language] and upload it to a web server. He can include, within his text, diagrams, photographs, animations and even running programs called applets. But how do others get to see what he has thus published?

Within the hypertext mark-up language is a container for a list of keywords. It is called the keywords meta tag. The author of the document places relevant keywords into the keywords meta tag of his document. These are words, which he thinks are likely to spring to people's minds, when they are searching for material on the subjects or ideas that his article covers. The words which actually spring into people's minds, when they are thinking of a particular subject or idea, are not necessarily very likely to appear in the actual texts of the most relevant documents. So choosing effective keywords is quite an art.
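For illustration, the following sketch shows what a keywords meta tag looks like inside a document and how a program might read it, using Python's standard `html.parser` module. The sample page and its keywords are invented for the example, and the names `KeywordsMetaParser` and `extract_keywords` are hypothetical.

```python
from html.parser import HTMLParser

# An invented page whose head carries a keywords meta tag.
SAMPLE_PAGE = """<html><head>
<title>Who Owns Radiospace?</title>
<meta name="keywords" content="radio spectrum, licensing, amateur radio">
</head><body><p>Main text goes here.</p></body></html>"""


class KeywordsMetaParser(HTMLParser):
    """Collects the comma-separated entries of any keywords meta tag."""

    def __init__(self):
        super().__init__()
        self.keywords = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "keywords":
            content = attributes.get("content") or ""
            self.keywords.extend(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def extract_keywords(html_text):
    """Return the list of keywords the author declared for the document."""
    parser = KeywordsMetaParser()
    parser.feed(html_text)
    return parser.keywords


if __name__ == "__main__":
    print(extract_keywords(SAMPLE_PAGE))
```

Note that the keywords are whatever the author chooses to declare. Nothing obliges them to match the document's actual content.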

Certain computers, connected to the Internet, accommodate running programs called search spiders. These continually trawl through all the documents on all the servers on the worldwide web. Each spider looks in the keywords meta tag of each document. It then places references to that document against all the relevant keywords in its vast search index.
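The index a spider builds in this way is, in essence, an inverted index: a table from each keyword to the documents that declared it. A minimal sketch follows, assuming the keywords have already been read from each document. The addresses and keywords in `CRAWLED` are invented, and `build_index` is a hypothetical name.

```python
from collections import defaultdict

# Hypothetical crawl results: document address -> keywords from its meta tag.
CRAWLED = {
    "example.org/radiospace.html": ["radio spectrum", "licensing"],
    "example.org/terraspace.html": ["land", "licensing"],
}


def build_index(crawled):
    """Place a reference to each document against every keyword it declares."""
    index = defaultdict(set)
    for address, keywords in crawled.items():
        for keyword in keywords:
            index[keyword.lower()].add(address)
    return index


if __name__ == "__main__":
    for keyword, addresses in sorted(build_index(CRAWLED).items()):
        print(keyword, "->", sorted(addresses))
```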

Also running on these computers is another kind of program called a search engine. This is accessed via a web page, which looks something like the one shown on the left.

A person looking for documents on a particular subject types the relevant keywords that come to mind into the search engine's entry field and then clicks "Go". The search engine then looks in its vast index for relevant documents. It then displays a list of the titles of the relevant documents it has found, together with a short summary under each title. The searcher then clicks on a title which interests him in order to display that document in his browser.
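The lookup step can be sketched as follows. This is a simplified illustration, not how any particular search engine works: the index contents, the titles and the function `search` are hypothetical, and relevance is assumed here to be nothing more than the number of query words a document matches.

```python
# Hypothetical index: keyword -> documents listed against it.
INDEX = {
    "radio": {"radiospace.html", "internet.html"},
    "licence": {"radiospace.html"},
    "land": {"terraspace.html"},
}

# Hypothetical titles shown in the results list.
TITLES = {
    "radiospace.html": "Who Owns Radiospace?",
    "internet.html": "Who Owns The Internet?",
    "terraspace.html": "Who Owns Terraspace?",
}


def search(query):
    """Return (title, document) pairs, best match first.

    A document scores one point for each query word it is indexed under.
    Ties are broken alphabetically so the order is predictable.
    """
    scores = {}
    for word in query.lower().split():
        for document in INDEX.get(word, ()):
            scores[document] = scores.get(document, 0) + 1
    ranked = sorted(scores, key=lambda document: (-scores[document], document))
    return [(TITLES[document], document) for document in ranked]


if __name__ == "__main__":
    for title, document in search("radio licence"):
        print(title, "-", document)
```

In this scheme the ordering depends on relevance alone, which is the property that was later lost.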

Search engines and their spiders were free ancillary services running within large computers operated by academic institutions and other non-commercial establishments. Documents were listed strictly according to relevance and nothing else. Everybody could find exactly what they wanted within all that was available. And they lived happily ever after. That is, until business and commerce got their dirty devious hands on the worldwide web and corrupted the whole process.

The natural motive of academics and other thinkers is to make their material available to people who are genuinely interested in the subjects concerned. They have no desire whatsoever to push their material down the throats of people who are not interested in it. Not so the businessman. He wants to attract anybody and everybody to his web site in order to beguile them, by all means possible, into buying his products. And he quickly found an effective way of doing this.

He compiles a list of the most popular search words that people enter into search engines. These are, for the most part, words with sexual, sportive or financial connotations. Then he places these words — along with keywords that are relevant to his own business — into the keywords meta tag of his web site's home page. So not only do people specifically seeking his products end up at his site, but also a vast number of other people who were searching for something quite different. His hope is that these other people, having arrived at his site, will be beguiled by his eye-candy presentation into buying his merchandise.

The overloading of the HTML keywords meta tag with false attractors eventually became such an overwhelming nuisance to Internet users that traditional search engines became almost useless for serious searching. Something drastic had to be done. The solution was to abandon the keywords meta tag as the means for search spiders to classify web pages. Search engines eventually ignored the contents of keyword meta tags altogether and instead extracted keywords directly from the main body text of the document.
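One simple way to extract keywords from body text is to count how often each word occurs, ignoring very common words. The sketch below assumes that approach purely for illustration. Real search engines use far more elaborate methods, and the stop-word list and the function `body_keywords` are hypothetical.

```python
import re
from collections import Counter

# A small, assumed list of common words that carry no subject matter.
STOP_WORDS = {
    "the", "a", "an", "of", "and", "to", "in", "is", "it", "that", "by", "for", "its",
}


def body_keywords(body_text, limit=3):
    """Return the most frequent non-trivial words in a document's text."""
    words = re.findall(r"[a-z]+", body_text.lower())
    counts = Counter(
        word for word in words if word not in STOP_WORDS and len(word) > 2
    )
    return [word for word, _ in counts.most_common(limit)]


if __name__ == "__main__":
    text = "Radio waves carry radio signals. Radio licences restrict signals."
    print(body_keywords(text, limit=2))
```

A method of this kind can only ever find words that literally appear in the text.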

Of course, this practice does not lend itself to the compilation of the most effective keywords. Most words people think of when looking for a document are unlikely to appear as such within the document's text. Keywords are generally big highly specific words. The thought such a keyword embodies is usually expressed much more powerfully within the actual text by a phrase comprising a succinct combination of much smaller words. Thus the process has already lost some of its original effectiveness. But this is only the beginning of woes.

To compensate for this problem of less effective keyword harvesting, web page authors began to write text that was deliberately force-populated with what they considered to be relevant keywords. This naturally resulted in bland text that was much less interesting and expressive, and indeed much more irksome and tiring to read. So it had to be kept short. The quality of web content thus began to diminish and so viewer interest had to be evermore held by quirky artwork. Web content thus became increasingly trivial.

At this juncture, by powerful marketing, US search engines began to attract the greatest number of web users. European and other search engines quickly fell from popularity and eventually disappeared. But the major players among the US search engines began to think commercially. Before, they had been ancillary services provided internally by academic institutions and large corporations.

But now they wanted to make a profit. Charging the individual searcher was essentially impractical. So they adopted a policy for charging commercial web site owners. One popular way was to charge for ranking sites within search lists. The more you paid to the search engine provider, the nearer the top of the relevant search list your site would appear. This meant that non-commercial web sites all but disappeared from all search lists.
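The effect of paid ranking can be seen by sorting the same result list two ways. The sites, relevance scores and fees below are invented solely for the comparison, and both function names are hypothetical.

```python
# Invented search results: relevance is a score from 0 to 1, fee is in
# arbitrary currency units paid to the search engine provider.
PAGES = [
    {"site": "academic-essay.example", "relevance": 0.9, "fee_paid": 0},
    {"site": "shop.example", "relevance": 0.4, "fee_paid": 500},
    {"site": "mega-store.example", "relevance": 0.2, "fee_paid": 2000},
]


def rank_by_relevance(pages):
    """List sites strictly by relevance, most relevant first."""
    ordered = sorted(pages, key=lambda page: page["relevance"], reverse=True)
    return [page["site"] for page in ordered]


def rank_by_fee(pages):
    """List sites by fee paid, using relevance only to break ties."""
    ordered = sorted(pages, key=lambda page: (-page["fee_paid"], -page["relevance"]))
    return [page["site"] for page in ordered]


if __name__ == "__main__":
    print("By relevance:", rank_by_relevance(PAGES))
    print("By fee paid: ", rank_by_fee(PAGES))
```

Under the second ordering, the most relevant page drops to the bottom simply because its author paid nothing.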

In all this confusion, I found that a small search engine called Google still returned good relevant results. I think that perhaps at the time it did not charge for ranking. I don't know. All I know is the results I got were good. Finally, for whatever reason or by whatever means, Google grew and became the de facto means by which practically all the web users in the world searched for sites and pages on the worldwide web. Thus Google has become the single exclusive access point, for everybody in the world, to information within the worldwide web.

This means that Google alone can determine how the entire worldwide web is indexed from the point of view of almost all the web users in the world. Its indexing policy can determine whose web sites can be seen and whose cannot; whose appears in search lists and whose does not. It can use arbitrary criteria to rank web pages by relevance, and indeed to exclude vast swathes of websites from its index altogether. Thus, one single commercial entity, within the jurisdiction of one single sovereign state, has almost total control over the flow of intellectual material among all the inhabitants of Planet Earth.

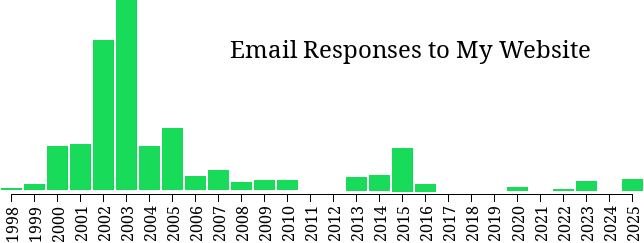

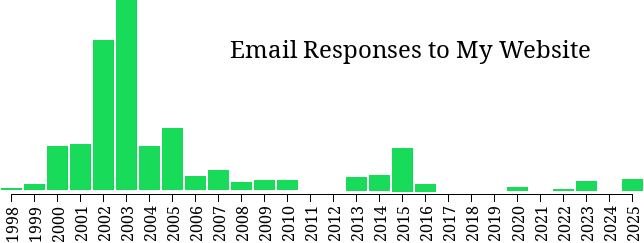

My web site has been on-line since April 1998. That's before Google came to power. Up until around 2004, my site had thousands of unique visitors a month. I received hundreds of emails in feedback from viewers. Now, looking in the access log, I see that this entire vast web site, of articles amounting to about 1·3 million words, receives about half a dozen meaningful hits a month if I'm lucky. And these hits are exclusively to pages about odd technical topics, which are of only ancillary significance. Searching for major topics within this website using any public search engine reveals nothing. Yet I have complied, as best I can, with all the technical standards that search engines currently demand, with the exception of degrading my text to suit search engines. Why should this be? Statistically, it makes no sense.

Over the 28 years my website has been 'up', its size and quality have grown continuously. The emails from its readers indicate enthusiastic and profound interest in its content. Notwithstanding, as can be seen from the above chart, there was a rapid growth in responses up to the end of 2003 followed by a catastrophic fall in 2004 with a small reverberation in 2005. Beyond a second much smaller echo in 2007, the responses deteriorated to nothing in 2011 with just a slight resurgence in 2015.

Furthermore, the quality and intellectual depth of the responses up to 2005 were high and pertained to long detailed discourses on profound subjects; whereas the responses after 2007 generally pertained to short ancillary pages about technical 'asides', which had little or no value as regards intellectual interaction.

It is abundantly self-evident that some external mechanism has been shaping this response profile and that it hasn't been due to any changes in the site's content.

The Spider That's Too "Clever"

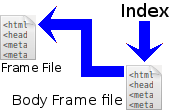

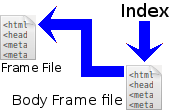

I speculate that one reason for the dramatic fall in hits to my website is Google trying to make its search spider a bit too "clever" for the good of writers like myself. What is known as the three-frame set has been a standard way of presenting documents of the kind that I write since Internet antiquity.

Each of my documents is presented, within the browser window, in three distinct areas called frames. The top frame, shown in pink, is the Heading Frame. It contains the document's title plus a 4-line extended heading or mission statement. To the left is the Navigation Frame shown in cyan (light blue). This contains hyperlinks to the group of documents of which this document is a part. The remaining frame, shown in yellow, is the Body Frame, which contains the main text.

The content of each of the three frames is contained in a separate file: a Heading Frame file containing the title and extended heading of the document, a Body Frame file which contains its text and a Navigation Frame file containing the navigation links. There is a fourth file (called the Frame File). This acts as an organiser to load the contents of each of the other three files into its appropriate area of the browser window. The document is displayed by simply requesting that the Frame File be displayed. The Frame File itself does the rest.

The browser window should be ideally 924 pixels wide: 210 for the Navigation Frame and 714 for the Heading and Body frames. The window height should ideally be about the same or a little more. With these minimal dimensions, the Heading and Navigation frames will not scroll. Only the Body Frame should contain a vertical scroll bar. This arrangement allows the reader to scroll down the Body text while the title and mission statement stay permanently in view at the top as a semantic anchor for the reader's mind while reading a long discourse. The Navigation Frame stays put also, keeping the reader always aware of where the document he is currently reading fits into the big picture.
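As a rough illustration of the arrangement just described, the sketch below generates the markup of a Frame File. It is not the actual file from this site: the function name `buildFrameFile`, the file names and the heading height of 120 pixels are all assumptions made for the example. Only the 210-pixel Navigation Frame width comes from the text above.

```javascript
// Build the markup of a Frame File for one document.
// ASSUMPTIONS: file names and headingHeight are hypothetical;
// only the 210-pixel navigation width is taken from the text.
function buildFrameFile({ title, heading, navigation, body, navWidth = 210, headingHeight = 120 }) {
  return [
    '<!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01 Frameset//EN" "http://www.w3.org/TR/html4/frameset.dtd">',
    "<html>",
    `<head><title>${title}</title></head>`,
    // Left column: Navigation Frame. Right column: Heading above Body.
    `<frameset cols="${navWidth},*">`,
    `  <frame name="navigation" src="${navigation}" scrolling="no">`,
    `  <frameset rows="${headingHeight},*">`,
    `    <frame name="heading" src="${heading}" scrolling="no">`,
    // Only the Body Frame is allowed a scroll bar.
    `    <frame name="body" src="${body}" scrolling="auto">`,
    "  </frameset>",
    "</frameset>",
    "</html>",
  ].join("\n");
}

console.log(
  buildFrameFile({
    title: "Example Essay",
    heading: "essay_heading.html",
    navigation: "essay_nav.html",
    body: "essay_body.html",
  })
);
```

Requesting the generated file is then enough for a frames-capable browser to fetch the other three files and place each in its own area of the window.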

This arrangement is ideal for presenting large documents on intellectual subjects. It is not, however, what they call "Google-friendly". Google seems to be completely flummoxed by frames. Initially, as I understood it, the Google spider could not read JavaScript. I therefore adopted the following strategy to induce Google to index my documents, which exploited the Google bot's inability to see JavaScript.

I needed to induce the Google bot to trawl the Body Frame file of my document for keywords. I therefore pointed all links that referred to the document concerned to the frameset's Body Frame file. In the Navigation Frame file, the Heading Frame file and the Frame File I put an HTML meta tag to tell search spiders not to index them but to follow the links within them onwards to other files. I put a different meta tag in the Body Frame file telling the spiders to both index the document and follow the links within it onwards to other documents.
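The two meta tags are not quoted in this text, but the standard robots meta tag provides the two combinations described: `noindex, follow` and `index, follow`. The sketch below, which assumes hypothetical role names for the four files of a framed document, shows which tag would go into which file.

```javascript
// Robots directives for the four files of one framed document.
// ASSUMPTION: the role names are hypothetical labels for this example.
const ROBOTS_BY_ROLE = {
  frame: "noindex, follow",      // Frame File: do not index, follow links
  heading: "noindex, follow",    // Heading Frame file
  navigation: "noindex, follow", // Navigation Frame file
  body: "index, follow",         // Body Frame file: index it and follow links
};

// Return the robots meta tag to place in the <head> of a file with this role.
function robotsMetaTag(role) {
  const content = ROBOTS_BY_ROLE[role];
  if (content === undefined) {
    throw new Error(`unknown file role: ${role}`);
  }
  return `<meta name="robots" content="${content}">`;
}

for (const role of Object.keys(ROBOTS_BY_ROLE)) {
  console.log(`${role}: ${robotsMetaTag(role)}`);
}
```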

What would appear in Google's index would therefore be references to each of my Body Frame files. If a searcher clicked on a link in a Google search results list, my Body Frame file alone would be displayed — without a heading, mission statement or contents list. That would look very frumpy and would not be much use. I therefore included, in the header section of my Body Frame file, the following JavaScript statement:
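The original statement is not reproduced in this text. As a rough sketch of the kind of statement meant, and assuming a hypothetical Frame File name `document_frames.html`, it could look something like this. The helper names are also inventions for the example.

```javascript
// ASSUMPTION: hypothetical Frame File name; the real name is not given in the text.
const FRAME_FILE = "document_frames.html";

// A page that is not inside a frameset is its own top-level window,
// so window.top and window.self refer to the same object.
function isLoadedAlone(win) {
  return win.top === win.self;
}

// If the Body Frame file is the only document in the window,
// replace it with the Frame File, which then assembles all three frames.
function redirectToFrameset(win, frameFile = FRAME_FILE) {
  if (isLoadedAlone(win)) {
    win.location.replace(frameFile);
    return true;
  }
  return false;
}

// In the header of the Body Frame file this would be invoked as:
//   redirectToFrameset(window);
```

Using `location.replace` rather than assigning to `location.href` is a choice made for this sketch: it keeps the bare Body Frame file out of the browser history, so that the Back button does not return the reader to the unframed page.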

This tells the browser that if it is being asked to load my Body Frame file as the only document to be displayed in the browser window, then it must load the document's corresponding Frame File instead. The Frame File then presents the three frames in their respective areas of the browser window. And all is well.

That is, until Google decided to make its bot a bit too "clever". It seems they made it able to read JavaScript. The problem is that, although the Google bot may be able to determine what a JavaScript statement is doing, it does not know why it is doing it. And in its ignorance, it assumes the worst. If it could not read the JavaScript, it would blindly continue on through the Body Frame file and extract keywords. But it does not do this.

When it encounters the above JavaScript statement at the beginning of my Body Frame file, it sees that it is immediately being redirected to another page. It assumes that because I am immediately redirecting it to another page, I must be doing something "sneaky" (Google's terminology). What is sneaky about presenting my document in a standard frameset, I can't imagine. Nonetheless, the Google bot, because it wrongly interprets what I am doing, refuses to index my document. Hence, from thousands of hits a month from interested viewers, my pages began to receive practically none.

If the spider had just stuck to ignoring JavaScript, all would have been well. If it had simply obeyed the JavaScript, executed the Frame File to assemble the frameset as a complete document the way a browser does and then indexed it, then again, all would have been well. But only half-doing the job, the way it does, has caused nothing but trouble for web writers like me.

My only way out of this was to create a parallel web site in which all my 1·3 million words of articles were re-formatted as single boring documents with no global site navigation. So I had one website for my readers and another for Google to index. What a pain. Hopefully, when a Google searcher finds one of my articles in Boring Format, he will click on a link somewhere within it, which will take him to the properly presented version of my website. This, however, proved not to be an effective solution. So eventually, I had to resort to using non-web networks as a means of providing "back-door" indexing for my website.

My Change to CSS

Once the CSS (Cascading Style Sheets) system for formatting web pages reached a reasonable maturity, I decided in mid-2018 to re-structure my entire web site using CSS coding to provide the same effect from a single HTML file that Frames had provided using 3 + 1 separate files. This got around Google's hobby-horse of having one addressable file per document. I then uploaded the new version of my web site to the server, together with an appropriately updated sitemap file, which I then re-pulled via my Google webmasters account. I thought this would solve the problem of not being indexed. How wrong I was!

Six months later, I was receiving only between zero and four valid Google referrals per month, with a little over four times that number falling as '404s' (page not found). This showed that after 9 months, Google had not updated the indexing of my web site to reflect the new structure as specified in the sitemap.xml file. Thank goodness for the indirect Kademlia hits. In all, therefore, the CSS-based site fared far worse with Google than the old Frames-based site had.

On examining my Google webmaster data, I saw that my site had been penalised for two reasons:

- my web pages were not "mobile-friendly", and

- my web pages took "too long" to load.

Presumably, "not mobile-friendly" signifies that a page is not suitable for viewing on a cell phone or suchlike.

The content of my website is, for the most part, intellectual essays of between five thousand and twenty-five thousand words, which are copiously illustrated with diagrams, photographs, animations and illustrative Java applets. Of course, the Java applets can no longer run the preferred way as in-text programs. Instead they must be launched as separate Java Web Start applications.

Addendum April 2019: Now, due to the superlative paranoia of the Oracle Java security system, even Web Start applications can no longer be launched. Happily, they can still be run using the Open-Source version of Java with the IcedTea plugin. But from the point of view of web site illustration the Oracle version of Java is now dead.

My question is: who would be stupid enough to expect to be able to read a 25,000 word illustrated intellectual essay on a cell phone? Nobody, I would think. So why penalise a web site full of such essays because its documents are not "mobile-friendly"? I think the old adage "horses for courses" comes in here somewhere.

Taking "too long to load" depends on how long is too long. And too long for what purpose? I can only deduce that Google considers the essays on my web site to be too long to put on the Worldwide Web. Consequently, if I wish such content to be indexed, I must break it up into a staccato of small pieces capable of fitting within the minuscule attention-span of most web viewers. In other words, contrary to what Google preaches, I must write my content specifically to suit the preferences and limitations of the search engine: not to suit the author-determined best presentation of the subject matter.

I must therefore conclude that, contrary to popular belief, Google is not a general search engine but a popular search engine geared to finding only the quippy little pages of commercial web sites.

Notwithstanding the above exasperating annoyance, the dominant reason for web sites such as mine disappearing from search listings is that all the major search engines have followed suit and changed their criteria for indexing websites. Any little website promoting a commercial entity, right down to a corner shop, gets pride of place in search listings. For anything of an intellectual nature, however, only globally known high profile web sites seem to get listed. Others appear to be classified as being of interest only to their owners and are consequently not considered worth including on a search list. Of course, another possibility could be that the programmers of search-engine algorithms have suddenly become extremely inept. I do not, however, think this to be likely.

From what I am able to glean from the web, the main criterion now for ranking a web page is the number of links there are to it from other web pages multiplied by the "importance" rankings of the web pages containing those links. This policy systemically guarantees that the high ranking of web sites that are already established is preserved and that nothing new will ever see the light of day. And this is exactly what appears to happen.
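To make the effect concrete, here is a deliberately simplified sketch of link-based ranking. It is not Google's actual algorithm, whose details I do not know. It simply lets every page pass its current importance on to the pages it links to, round after round. The function name `rankPages` and the page names are hypothetical.

```javascript
// links: page -> list of pages it links to. All page names are hypothetical.
// Simplified link-based ranking: NOT the real algorithm of any search engine.
function rankPages(links, rounds = 20) {
  // Collect every page that appears as a source or as a target.
  const pages = new Set(Object.keys(links));
  for (const targets of Object.values(links)) {
    targets.forEach((t) => pages.add(t));
  }

  // Every page starts with the same importance.
  let rank = {};
  for (const p of pages) rank[p] = 1;

  for (let i = 0; i < rounds; i++) {
    const next = {};
    for (const p of pages) next[p] = 0;
    for (const from of pages) {
      const targets = links[from] ?? [];
      for (const to of targets) {
        // Each link hands on an equal share of the linking page's importance.
        next[to] += rank[from] / targets.length;
      }
    }
    rank = next;
  }
  return rank;
}

const sampleLinks = {
  megaSiteA: ["megaSiteB"],
  megaSiteB: ["megaSiteA"],
  newEssay: ["megaSiteA"], // links outwards, but nothing links to it
};
console.log(rankPages(sampleLinks));
```

In this toy web the two established sites keep passing importance back and forth between themselves, while the new essay, having no inbound links, drops to zero after the first round and stays there.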

I'm led to believe that this ranking policy came from the notion that the relative importance of a published academic paper is proportional to the number of other academic papers that cite it. Let's test this idea. Suppose I am a scientist. I publish a new academic paper. It is on a very important topic within a little known field on the very leading edge of scientific research.

Question: how do the authors of later scientific papers on related topics get to know about my paper in order to cite it?

I publish my new paper on the Worldwide Web. It has no importance ranking whatsoever because nobody has [yet] cited it. So Google won't index it. So nobody will be able to find it using Google. So nobody will know of its existence. So nobody will cite it. So its ranking will remain forever zero. It will be forever considered unimportant. Hence it will remain unindexed and therefore uncited. Thus, irrespective of how profound and potentially world-changing the revelations in my paper may be in reality, they will remain forever hidden from the world.

But who has decided that my paper is irrelevant? Is it the scientific community? Is it the sharp experienced minds of thinking people? No, because they have never seen it. So they could never make any judgement about it. This is because they were unable to become aware of it because they couldn't know of its existence. And all because a search robot with a flawed algorithm 'pre-decided' that it was irrelevant and so locked it into permanent limbo.

Obviously, a new academic paper does get cited. So it must become known to members of the academic community beyond its author. The process by which it becomes known must therefore follow an algorithm that is very different from that used by the Googlebot. It involves many channels of much greater bandwidth than a web search engine. It involves encounters at discourses and seminars, casual conversations in the corridors, publication in academic institute journals, press and television interviews and a host of other educational and socio-economic channels. These are the only ways through which anything new can become known. All the Google algorithm can do is maintain the status quo.

But what of those of us who do not have, and have never had, any kind of academic or institutional affiliations? How do we share our new thoughts with the world? Certainly the answer seems to be: not through the Worldwide Web.

I painstakingly research and write a new document on what must be, at least to some people in the world, an interesting topic. I upload it to my web site. I link it into my site index and arrange for appropriate pages already on my site to link to it where relevant. My new document has no links pointing to it from external web sites. It therefore does not have any search ranking whatsoever. It will therefore never appear in any search listings. Consequently, nobody will ever see it. Consequently, nobody important will ever link to it from their important site. It will remain forever excluded. So those "at least some people in the world" will never be able to find it, no matter how well tuned a set of keywords they may enter into the search engine. Quod erat demonstrandum.

Notwithstanding the above, suppose I already know somebody who is very interested in the topic upon which I have written. I give him the URL of my new document so that he can access it directly without needing to use a search engine. He reads it. He likes it. He tells other people about it. But why should he put a link to it from another web site? He can simply bookmark it in his browser. So even if people get to know about my new document by means that are outside of the worldwide web, it is still not very likely to be linked-to from any "important" sites.

Thus, for the purpose of revealing to everybody the goldmine of information that is available on the worldwide web, this indexing policy is systemically dysfunctional. It simply preserves the popularity of what has already been made popular through other means. But perhaps this is deliberate. Perhaps the intention behind the change in the search algorithm around 2004 was for the occult purpose of creating, strengthening and preserving a worldwide web establishment, while minimalizing or excluding everybody else. Before 2004, I could search the web and find a vast diversity of interesting stuff. Now my searches end up at the appropriate established mega-site that shows me only the bland sanitized content that I am meant to see.

The worldwide web has thus become subjected to a passive or inductive form of censorship, effected by the fact that a search engine places references to large established sites at the top of any search results list, pushing other "also ran" sites way down the list, where only the very diligent searcher will bother to look, leaving most not listed at all. But whose fault is this? Is it the fault of Google? Partly. But, in my opinion it is mainly the fault of the vast majority of people who allow a private monopoly like Google to become the only route of access to the vast wealth of information on the Worldwide Web. It is the fault of the lazy and apathetic mentality of common man who, through his unconditional awe and worship of corporate power, always blindly clings to the most promoted option.

An interesting corollary of Google's dysfunctional search algorithm is its converse: namely an algorithm that specifically indexes sites that contain new ideas born of lateral thinking outside of the established mainstream. It is the inverse of Google's search algorithm: simply ranking a web page according to how few referrals it has from other websites.
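Such a converse ranking is trivial to express. The sketch below is only an illustration of the idea: it counts, for each page in a small hypothetical web, how many other pages link to it, and then lists the pages with the fewest referrals first. The function and page names are assumptions made for the example.

```javascript
// links: page -> list of pages it links to. All page names are hypothetical.
function countInboundLinks(links) {
  const inbound = {};
  for (const [from, targets] of Object.entries(links)) {
    inbound[from] = inbound[from] ?? 0;
    // Count each linking page once and ignore links from a page to itself.
    for (const to of new Set(targets)) {
      if (to !== from) inbound[to] = (inbound[to] ?? 0) + 1;
    }
  }
  return inbound;
}

// Inverse ranking: the fewer referrals a page has, the higher it is listed.
function rankByFewestReferrals(links) {
  const inbound = countInboundLinks(links);
  return Object.keys(inbound).sort((a, b) => inbound[a] - inbound[b]);
}

const sampleWeb = {
  megaSiteA: ["megaSiteB"],
  megaSiteB: ["megaSiteA"],
  newEssay: ["megaSiteA"],
};
console.log(rankByFewestReferrals(sampleWeb));
```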

Of course, there are many other arbitrary criteria used for determining whether or not a web site should be penalised in search listings. One such is the imposition that there must exist an <h1>/</h1> tag-pair in each document (presumably at the beginning) and that the content between these heading tags must be consistent with the content between the document's <title> and </title> tags. For the most part, this makes the <title> tag redundant. So why use it at all?

The default <h1> tag causes a heading to be displayed in enormous print. Non-commercial and non-journalistic web sites rarely use the <h1> size heading. Hence, in order to accommodate this Googlean imposition, web authors are required to redefine the <h1> tag to be a sensible size using a CSS class; an enormous chore for a web site with hundreds of HTML documents, which achieves nothing for the content of the document. It is another clear case of having to write the document for the search engine; not the user.

The content of the <title> tag serves a different purpose from that of the <h1> tag. It could, in some cases, be identical syntactically. In this case the imposition would be met and the document not penalised. In many more cases, however, in my experience, the title content may well contain the <h1> content. Notwithstanding, under good writing style, whereas the content of the <title> tag may be semantically consistent with that of the <h1> heading, it is unlikely to be syntactically identical to it. Since search engines can only look at syntax, they cannot make anywhere near the same range and degree of semantic association as can the human mind. Consequently, in such a case, the document will be unjustly penalised.
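How a search engine actually compares the two is not something I know. The sketch below merely illustrates the three purely syntactic cases distinguished above: identical, title containing the heading, and different. The function names and the example strings are hypothetical.

```javascript
// Reduce a string to a canonical form for a purely syntactic comparison.
function normalise(text) {
  return text.trim().replace(/\s+/g, " ").toLowerCase();
}

// Classify the syntactic relationship between <title> and <h1> content.
// This looks only at characters; it knows nothing about meaning.
function compareTitleAndHeading(title, heading) {
  const t = normalise(title);
  const h = normalise(heading);
  if (t === h) return "identical";
  if (h !== "" && t.includes(h)) return "title contains heading";
  return "different";
}

console.log(compareTitleAndHeading("Restrictive Browsers", "Restrictive  browsers"));
console.log(compareTitleAndHeading("Cyberspace: Restrictive Browsers", "Restrictive Browsers"));
console.log(compareTitleAndHeading("Who Steers the Web?", "Restrictive Browsers"));
```

The third pair could be perfectly consistent in meaning to a human reader, yet a comparison of this kind can only report it as different.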

At a certain time [I think it was around 2018-19] Google 'found' that more than half of all search enquiries came from mobile phones [or, at least, from mobile devices of some sort]. This apparently precipitated another ridiculous imposition that, consequently, all web sites should be what Google termed mobile-friendly. The reason or logical dependency between the propositions: 'more than half of all search enquiries came from mobile devices' and 'all web sites must be mobile friendly' eludes me, although I can see how impulsive thought could arrive at such a conclusion.

Is Google saying that web sites whose documents — such as this one — cannot be comfortably read on a mobile device shouldn't exist or are of little or no interest to anybody? Who in their right mind would want to read a highly illustrated intellectual article of the order of 20,000 words on a mobile phone? But they may well want to read it on a PC monitor or printed out on A4 paper. Again, the term "horses for courses" springs to mind.

The Cease and Desist Order

But there is yet another form of censorship, which the US government — or any other US-based authority, agency or interested party — can impose on web sites. That is a legal instrument called the Cease and Desist order.

Google, which is based in the US at the confluence of all major routes within the worldwide Internet infrastructure, is exclusively subject to, and regulated by, US law. If the powers that be [whoever they are] do not like something that has been written in a web page, they can issue a Cease and Desist order. Not to the purported offender [i.e. the author of the page], but to the search engine operator.

The demand of the Cease and Desist order is not to take down the web page concerned or to delete the "offending" content from it, but to cease and desist from placing the page in a search index. Thus it will never feature in any search results. This way, the location of the site's web server, the nationality of the author of the web page and the particular sovereign jurisdiction within which he may currently reside are all irrelevant. The search engine is located in the US and is therefore bound by US law, so the execution of the Cease and Desist order is easy and straightforward.

I once saw a document that was titled as a Cease and Desist order within a search list of my web site. Strangely, it seemed to be nothing more than a template. The reference to the offending web page and the offending content within it were both blank. It had the name and address of what I presume was an American law firm. Apart from this, it seemed to be a complete non-entity. Shortly afterwards it disappeared. I have never been any the wiser. I presume that somebody somewhere got their legal knickers in a twist. On the other hand, the inexplicably low hit count of my website (between zero and 4 valid referrals per month) could be explained by the presence of some legal order of this kind forbidding the indexing of my site. Perhaps it is something I said.

The upshot of all this is that general public access to content on the worldwide web appears to be inductively steered, by a single political interest, towards approved parts only of the total content that exists. And the content of those approved parts seems to be becoming more and more trivial. I am neither for nor against the United States of America. I would have the same gripe were any other sovereign power in the same singular position. Nonetheless, a situation exists in which one sovereign power alone is able to exert almost total control over something which pertains to the entire world. And it is my firm opinion that any such situation is fundamentally against the best interests of mankind.

Graded Access Speed

Finally, on top of all the above impositions, there is a further inductive form of censoring that may soon be imposed by the major Internet Service Providers. They propose to provide higher data transfer speeds for the websites of those who are able to pay higher fees. These will effectively promote the dominant commercial websites backed by large corporations, burying websites — no matter how high the quality of their content — of individuals and smaller groups who simply cannot afford these higher fees.

But this turn of events should be of no surprise to anybody. After all, it is simply capitalism in action. Once the Internet opened itself to commerce, its old egalitarian vision of universal freedom to exchange information was destined never to last. It had eventually to enter the real world in which the interests of the malleable many are outweighed by the greed of the favoured few.

Restrictive Browsers

The search algorithm is not the only means by which the American Internet corporate clique is applying passive censorship to accessible web content. Mainstream web browsers are also being used to steer users towards what seem to be their preferred types of website. An example is the Microsoft Edge web browser, which, on my Windows 10 laptop, is set to be the default browser that, consequently, most people will tend to use. When I attempted to access this [http] website with Microsoft Edge, a statement appeared telling me that this site could not be reached securely. Said statement is, of course, a lie. There is absolutely nothing insecure about accessing my [this] website via an http connection. Microsoft Edge then refuses to open the site. Its condescending statement then continues by offering to open the site in the older Internet Explorer browser presumably for whoever really wants to take the "risk" of accessing my website.

I imagine that all this would deter most people from accessing my site, even though there is no factual danger whatsoever — to their personal privacy, their computer or anything else — in their accessing it.

The default web search facility for the Microsoft Edge browser is Microsoft's own search engine called 'Bing'. I registered my site with Bing. During the registration process, what I read conveyed to me that although Bing notes the existence of all registered websites, it only indexes https sites. Consequently, anybody conducting a web search with Microsoft Edge will be presented only with https sites in search results.

Thus the refusal of Microsoft Edge, the default browser in Windows 10, to display non-https sites, together with Bing's indexing of https sites only, looks to me like yet another corporate instrument of exclusion aimed against all non-mainstream producers of web content. I have since changed to https access, just to appease such browsers. It offers no added security whatsoever regarding my particular web site. It merely costs more.

Fortunately, the Mozilla Firefox browser handles the situation factually and pragmatically. It accesses and displays all websites [http and https] plain and simply. It only presents a warning message [shown on right] when the keyboard has focus within a text field that POSTs text to the site's server. If you click on the 'Learn More' link, you are led to a web-based 'help' page that gives a clear and factual explanation of the very limited situation in which you may be vulnerable. Of course, at the time of writing, this warning message never appears anywhere on my site when accessed by Mozilla Firefox.

The whole problem of web search exclusion, as well as coming from Google's dysfunctional search algorithm, is enormously exacerbated by its practice of extracting search keywords from content text while ignoring the web author's own keywords specified in each page's HTML keywords meta tag. This practice, in turn, came from the propensity of some web authors to pad the HTML keyword meta tags of their web pages with 'false attractors' — keywords that have no relevance to the page content but which many people will tend to use in searches.

Notwithstanding, the only type of website that has any motive to pad its keyword meta tags with false attractors is a commercial site. The only type of website into which the browser user needs to enter sensitive personal data is a commercial one. A commercial site is thus interactive: it involves two-way communication between the browser user and the website. The website displays information about its wares and prices; the browser user returns his name and payment information [such as credit card details] to the website's server. So, of course, to be secure it needs to use https [Hypertext Transfer Protocol Secure]. And, of course, search engines need to use text-based keyword extraction in order to circumvent the inevitable malingering shenanigans of commercial interests. This is clearly a job for the likes of Google and Bing.

On the other hand, what I call an intellectual website is passive. Not all intellectual websites are 'academic' or mainstream sources. They include also informative, disseminative, interest, personal and other non-interactive websites of any size or authorship. There is no need — there is nothing gained — for them or their viewers by using and hence incurring the extra cost of https. And of course, the authors of purely intellectual sites have no motive to pad their keyword meta tags with false attractors. They want to attract only those viewers who are interested in what they have to say. So passive websites can be served safely via http and indexed according to the keywords that appear in each page's meta tag.

Consequently, there is a need for a world-class, universally known search engine to index http sites the old-fashioned way from their keyword meta tags.

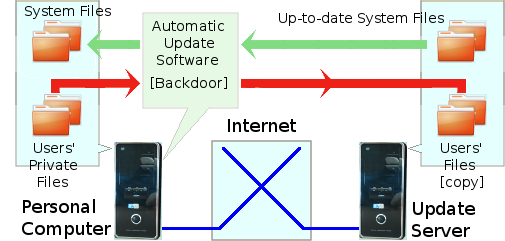

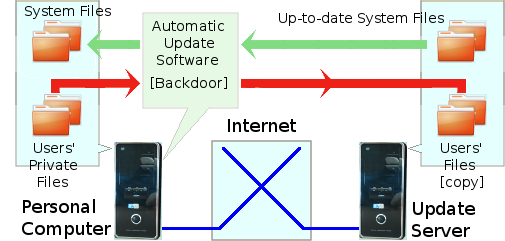

Originally, each computer connected to the Internet had a Post Office server running all the time. People from all over the world could send an email at any time to the server. The server displayed an alert on the screen of the appropriate recipient when an email arrived for him. He then accessed it and read it. Thus the email system of the Internet was distributed and, by consequence, was reasonably private.

Running an email server requires the recipient's computer to be permanently on-line. This was fine in the day when the clients of the Internet were large Unix machines. Soon, however, small personal computers became the norm for the majority of Internet users. It is not normal, nor is it desirable, to keep these switched on all the time, so they do not lend themselves to running mail servers. The solution is to have a distribution of large computers permanently on-line running email servers. Each personal computer then runs an email client program, which can access its local email server from time to time to download any new mail. This is still a reasonably well distributed system.

Distributed systems are anathema to authorities. This is because they are difficult to monitor and control. The solution is to lure people to large web-based email sites. These provide an attractive user interface, which is served through a web browser. A user of a small personal computer logs on to his account at a web-based email site to send and receive his email. The US email site providers dress up their user interfaces with lots of attractive artwork and advertise their "free" services. Whereupon, the vast avalanche of new Internet users flock to them from all over the world, like moths to a candle flame.

Email had originally been a channel for serious communication. With the advent of the web-based email giants, this abruptly ended. An ethos of total trivia quickly established itself. Now, people who have no real interest in email adopt a habit of forwarding endless jokes and stupidity to all and sundry. The result is that people's mailboxes become choked each day with truckloads of utter rubbish. Each has to spend ages getting rid of all this unwanted material and seeking out the few proper emails buried within it. This phenomenon is greatly exacerbated by the endless avalanches of unwanted and utterly abhorred junk advertising.

Many people cannot keep up with the necessary daily purging of junk from their email in-boxes. Vast numbers of email boxes therefore become abandoned as giant pus-balls of junk festering within these megalithic webmail servers. The abandoners then have no alternative but to establish a new email address. And the cycle repeats. What a stupid waste of resources!

Against this stressful backdrop is the perilous situation in which a statutory communication, such as a payment demand, can legally be sent by email, and in which the mere fact of its having been sent means that the recipient is deemed to have received it. Consequently, if the recipient accidentally deletes it along with the hundreds of junk emails among which it is buried, or if the email becomes misrouted, or if his Internet connection is down, or if his computer fails, then he is penalized. To my mind, this situation is an impractical absurdity, apart from being thoroughly unjust.

Unlike with the PC-based email client, the user of web-based email does not download his email into his own computer. Instead, it is left in his email account on the giant server of the web-based email service provider. Here, it is stored in supposed privacy. This privacy, however, lasts only so long as US law allows it, either in general or as may pertain to a specific user at a particular time. So, no matter what your nationality or country of abode, if an agency of the US government issues a legal instrument to the US-based webmail service provider, requiring the provider to reveal your email archives to that agency, then it shall be done.

It is well evinced, by the nature of advertisement applets at the edges of my browser window, that the web-based email service provider trawls the content of my emails for clues as to what he is most likely to be able to sell to me. Notwithstanding, it is but a small step from here to trawling the content of my emails to see what political opinions or allegiances I hold and whether these be in or out of line with US government interests.

I find the monitoring and surveillance of the meta-data (such as the sender, recipient, subject and date) of an email disturbing. In the United Kingdom, so I am led to believe, the actual content of an email is also monitored. I find this even more disturbing. Monitoring, however, is a passive activity. It does not actively interfere with or disrupt the transit of emails.
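
To show how little effort it takes to separate meta-data from content, here is a minimal sketch using Python's standard `email` module. The sample message and the function name `extract_metadata` are invented for illustration. The point is only that the four header fields mentioned above can be read without ever looking at the body.

```python
from email import message_from_string

# An invented sample message: headers, a blank line, then the body.
RAW = """\
From: alice@example.org
To: bob@example.net
Subject: Lunch on Friday
Date: Mon, 06 Jan 2014 09:30:00 +0000

The body is never examined by the function below.
"""


def extract_metadata(raw_message):
    """Return sender, recipient, subject and date, ignoring the content."""
    msg = message_from_string(raw_message)
    return {name: msg[name] for name in ("From", "To", "Subject", "Date")}


for field, value in extract_metadata(RAW).items():
    print(f"{field}: {value}")
```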

Active intervention, on the other hand, definitely does interfere with and disrupt the transit of email. I used to write frequently to somebody on the other side of the Atlantic. Recently, however, Microsoft decided to block my emails from its network. Since my correspondent has a Hotmail account, my emails can no longer be received by him, although I can still receive the emails he sends to me. I also used to correspond with another person across the Atlantic. The subject matter we discussed necessitated that we exchange files attached to our emails. My correspondent has a Google mail account. We soon discovered that Google blocks the exchange of attachments of the kind we needed to exchange. This is direct and unauthorized interference with personal mail. I believe it would be classed as a crime if it were perpetrated by an individual. It would appear, however, that corporations are above the law.

Notwithstanding, the original email facilities of the Internet are still alive and well. Furthermore, I think the time is nearing when each home will be equipped with a domestic local area network. This will be connected to the Internet probably by a small, low-consumption server, which operates continuously. This server can then run the old-fashioned post office mail server, which can receive and send the home's emails, independently of any megalithic web-based service. This, however, will only work provided the Internet service providers (ISPs) leave open the listening ports required by post office mail servers. Disturbingly, there has been an increasing trend, over the past decade, for ISPs to close these listening ports.
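
Whether a given listening port is reachable can be probed with a short Python sketch like the one below. It assumes the mail server listens on TCP port 25, the port conventionally used for SMTP, and the function name `port_is_reachable` is my own. To learn anything about an ISP's blocking, the probe would have to be run from a machine outside the home network, against the home's public address.

```python
import socket


def port_is_reachable(host, port=25, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, filtered, timed out or unresolvable: all count as unreachable.
        return False
```

A result of `False` does not say who closed the port. It could equally be the ISP, the home router or the server itself.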

Social networking can be done most simply and effectively through open websites and email. This, however, is outside corporate or state control or influence. And this will not do. The powers that be — commercial or political — therefore set their goal to induce the vast majority of Internet users to interact exclusively through a small clique of dominant social-networking sites.

I remember the early social networks as being quite useful. In the early days (circa 2003), I could search MSN for — and find — other people in the world who shared my values, interests and aspirations. But not any more. The dominant social networking sites today such as Facebook stubbornly inhibit any attempt I may make to link with people of similar values, interests and aspirations. I quickly found that entering my interests on my Facebook page was not to help other people with those interests to find me. On the contrary, it was exclusively to help commercial advertisers target me with products based on my published interests. Understandably, I no longer have a Facebook presence.

Addendum 17 Sept 2025: In an attempt to research deeper into social media sites, I tried, on 22 April 2025 and several times thereafter, to open a Facebook account. I knew that I had closed the previous account I had 15 to 20 years ago. I filled in the required details, which the Facebook dialogue confirmed were all in order, and clicked the button to take me to my new account. Almost instantly the whole space filled with a message saying my account had been suspended due to misuse by my trying to create a duplicate account and posting inappropriate content. My first thought was that it had an ancient record of my closed account. So I tried Facebook's account search using my old email addresses. But every time, I got a reply saying "You’re Temporarily Blocked: It looks like you were misusing this feature by going too fast. You’ve been temporarily blocked from using it. If you think that this doesn't go against our Community Standards, let us know."

Pray tell me what does "going too fast" mean in this context? It makes no sense to me. No matter how many times I try this, I get the same result. So obviously I can't get in there to "let them know". It's what I've grown to expect from the Artificial Imbeciles through which we are forced to deal with the omnipotent brain-dead corporations that now dominate the Internet. And the public just accepts it.

At 13h17 on 01 October 2025 I received an email from Meta saying: "Your account has been permanently disabled" but still not a clue as to why. There was a link in the email to Meta's Community Standards, which I accessed and read extensively. I was still left without a clue. So, end of, I guess. Perhaps Facebook isn't the place to be anyway.

The only people with whom I was ever able to link up on a modern social network were people I already knew and people whom they already knew. These only knew each other through family or casual connections. Of all the "friends" I accumulated via social networking, none shared any of my values, interests or aspirations. Their conversation was, to me, always trivial, inconsequential and supremely boring. For common man, the exchange of information via the Internet has thus become impotent, relegating any serious grass-roots thinker to a voice crying in the wilderness where none can hear.

This has left television and other mass-media, once again, free to brainwash the public mind into forsaking its own well-being to serve the self-interest of the global elite. Once again, only those with lots of money — namely the state and the corporate — can make their voices heard.

This situation is not surprising. For thinking like-minded people throughout the world to be able to link up via the Internet, to exchange and develop ideas, is inherently dangerous for any global elite. So, through social networks, the Internet elite have managed to kill two birds with one stone. They have reduced to triviality practically all inter-personal communication between common people, while, at the same time, turning them into a captive audience for their highly-targeted commercial advertising.

Centralised Chat Sites

Almost from the beginning, a method of holding live typed conversations across the Internet was commonly available through a facility called Internet Relay Chat (IRC). There are many free open-source IRC client programs, which can be downloaded and run on practically any computer, at least any running a version or derivative of Unix. Ordinary IRC traffic is relayed through IRC servers, but the Direct Client-to-Client (DCC) extension lets two clients connect directly, operating in what is termed peer-to-peer mode. A DCC conversation is conducted actively by only the computers belonging to those who are parties to the conversation. Other computers en-route between them are only ever passively involved.

This allows any two people, each located anywhere in the world, to hold a private conversation, without the data packets carrying that conversation passing through any central server. Neither the identities of the parties to the conversation, nor the content of what they say, can be intercepted, monitored, recorded, interrupted or blocked by any third party interest such as a corporation or government agency. And for the powers-that-be, this again, simply would not do.

Consequently, as with personal web sites and email, large US corporations soon sprang up and pro-actively attracted the exploding base of worldwide Internet users to establish free accounts on their servers. The declared motive of these corporations was, again, to turn all the people of the world into a captive audience for their highly-targeted commercial advertising.

On these commercially-based services, at least the initiation of the connection between the two parties to a conversation is done via a corporate server. Thus, the metadata — the identities and Internet addresses — of the parties to the call are known. Information regarding who conversed with whom and when, for subscribers all over the world, is thus available on the corporate server. While this is very useful for marketing products, it is perhaps even more useful for surveillance. And being based in the US, all these corporate servers are subject to US law and so can be forced at any time, by any agency of the US government, to hand over this information.

The operators of these Internet chat services assure us that our privacy is their priority and that we need not be concerned because all our conversations are protected by strong encryption. Notwithstanding, the session encryption key for each call isn't created by the parties to that call. It is created by the service provider's client software installed in each party's computer. There is therefore nothing to stop the service provider building into his client program the capability of sending that key to his server if requested.

Furthermore, there is nothing to stop the service provider opting for the methodology whereby it is his server, rather than the client software, that issues the session encryption key for each call. In this case, any agency with access to the service provider's server can eavesdrop on any conversation between any users worldwide. Hereby, the identities of the parties to a conversation, and the content of what they say, can now be intercepted, monitored, recorded, interrupted or blocked by any third party interest such as the service provider concerned or, by the appropriate legal instrument, any US government agency. And are we realistically to believe that if they can, they won't?
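
The consequence of server-issued keys can be demonstrated with a toy model in Python. Everything in it is an assumption made for illustration: the `Server` class and the `keystream_xor` function are hypothetical, and the cipher is a deliberately simple stand-in that should never be used for real secrecy. It shows only that whoever issues a session key, and keeps a copy, can read what that key protects.

```python
import hashlib
import secrets


def keystream_xor(key, data):
    """Toy cipher: XOR data with a SHA-256 counter keystream. Illustrative only."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        block = hashlib.sha256(key + offset.to_bytes(8, "big")).digest()
        chunk = data[offset:offset + 32]
        out.extend(a ^ b for a, b in zip(chunk, block))
    return bytes(out)


class Server:
    """Hypothetical provider that issues the session key for each call."""

    def __init__(self):
        self.issued_keys = {}

    def issue_session_key(self, call_id):
        key = secrets.token_bytes(32)
        self.issued_keys[call_id] = key     # the provider keeps its own copy
        return key


server = Server()
session_key = server.issue_session_key("call-1")

# One party encrypts with the key the server handed out.
ciphertext = keystream_xor(session_key, b"meet me at noon")

# Anyone with access to the server's records can undo the encryption.
recovered = keystream_xor(server.issued_keys["call-1"], ciphertext)
print(recovered)
```

Applying the same XOR keystream a second time restores the original bytes, so the last line prints the message exactly as the caller wrote it.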

Cloud Storage Sites

A cloud, in this context, is a large mainframe computer, or even an array of such computers, which has a very large amount of data storage resources (such as disk drives) attached to it. This computer has very high bandwidth access to the Internet. Anyone may open an account on this computer via the cloud service provider's web site. This gives him an initial amount of free storage space. He may request more than this, but he will then have to pay so much a month for the extra space. To be able to use the cloud service to back up his data, he must download and install the cloud service provider's client software to run on his personal computer.

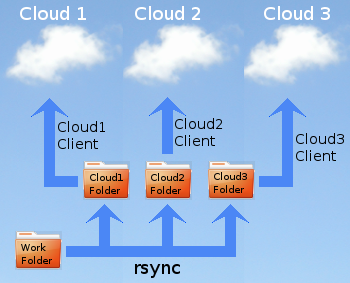

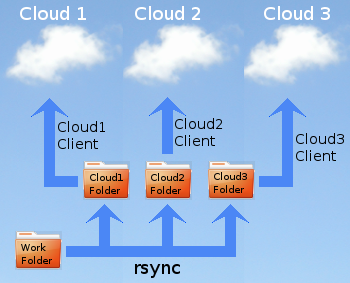

I think it was around 2008 that certain corporations started to offer limited amounts of cloud storage to people in general free of charge. Currently (early 2014) I subscribe to three cloud storage services on which I'm allowed between 2 and 5 gigabytes of storage for free. I use each to make an off-site safety backup of my work, which comprises my writing, software and research library. This all amounts to no more than 1·5 GB.