The classical Theory of Evolution postulates that life evolved from simple to complex. For this to happen, it is necessary for some mechanism to exist that can increase the information content of the living cell. It is self-evident that a single-celled life-form like an amoeba requires much less information to build and operate it than does a human being. If, therefore, the human being evolved from something as simple as an amoeba, the inherent programming within the cell that eventually gave rise to the human life-form must have increased drastically during its period of evolution.

As far as I can grasp it, modern evolutionary science asserts that yes, this can happen. The information content of a living cell can be arbitrarily increased by blind natural phenomena. Natural selection then allows the survival of only those few life-forms whose increase in cellular information results in constructive improvement. The majority, whose increase in cellular information makes them either less capable or completely dysfunctional, all die out almost immediately.

Hard evidence that the amount of constructive information within a living cell can be increased by blind natural phenomena does not come from any observation of the living cell. Nobody, as far as I am aware, has ever observed a cell gaining the information necessary to increase the complexity of the life-form of which it is a part. The evidence comes from physics. Specifically, it comes from the branches of physics called thermodynamics and information theory. The whole idea hinges on a concept called entropy and its relationship to the so-called information content.

Entropy and Information

Entropy has always seemed to me to be a negative concept. It increases as order decreases. This is the way the universe generally moves. A classical example of increasing entropy is dropping a glass on the floor so that it breaks. A broken glass never spontaneously reassembles itself seamlessly into a whole glass. Another is smoke coming from a fire. The particles of smoke always disperse into disorder. They never move together again and absorb the heat energy needed to recombine into the fuel of the fire, such as wood.

Notwithstanding, some will argue that entropy can, under certain circumstances, decrease within a bounded locality. An example is life. A life-form eats food. In so doing, it mashes the food into a disordered state. But then its internal organs use the disordered organic molecules in the food to build the ordered parts of the life-form's body. Order and organization within the life-form's body thereby increase. Entropy, by definition, has thus decreased within the bounds of the body. This decrease in entropy, or increase in knowable (or available) information, was achieved at the expense of a far greater increase in entropy in the outside world.

The essential point that should be noted, however, is that this increase in order was carried out under program control. The procedure governing the process was built into the mechanism that achieved the decrease in entropy. This mechanism was itself constructed according to instructions contained within the life-form's cells. It was not achieved by the unaided operation of the laws of physics.

There are other, more basic examples of entropy decreasing (order or information-content increasing) within bounded locales. Consequently, some suggest that, over billions of years in zillions of small steps, the information-content of the construction and operating procedures within a living cell could increase in the same way. But it can't. To explain why, it is necessary to make a clear distinction between what I shall call syntactic information and what I shall call semantic information.

Syntactic Information

The amount of syntactic information within a locale is a measure of the complexity of the structure within that locale. For example, the binary stream 01000001 contains 8 bits. Adding two more bits at the end increases its syntactic information content by 25%. However, without external interpretation, this piece of syntactic information carries no meaning. To make any sense, it must be written and read according to the same convention of interpretation. For example, 01000001, interpreted according to what is called the ASCII code, represents the letter "A".
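As a minimal sketch of this point, the following Java fragment takes the 8-bit stream 01000001 and reads it under two different conventions: as a plain binary number and as an ASCII character. The class and method names are hypothetical and chosen only for illustration.

```java
// Hypothetical demo class; none of these names come from any real package.
class BitStreamDemo {

    // Read an 8-bit string as an unsigned binary number.
    static int decodeNumber(String bits) {
        if (bits.length() != 8) {
            throw new IllegalArgumentException("expected exactly 8 bits");
        }
        return Integer.parseInt(bits, 2);
    }

    // Read the same 8 bits under the ASCII convention instead.
    static char decodeAscii(String bits) {
        return (char) decodeNumber(bits);
    }

    // Percentage growth in bit count when extra bits are appended.
    static double percentIncrease(String original, String extraBits) {
        return 100.0 * extraBits.length() / original.length();
    }

    public static void main(String[] args) {
        String stream = "01000001";
        System.out.println(stream.length());                // 8
        System.out.println(percentIncrease(stream, "10"));  // 25.0
        System.out.println(decodeNumber(stream));           // 65
        System.out.println(decodeAscii(stream));            // A
    }
}
```

The bits are identical in both readings. Only the convention applied by the reader differs, which is why the same stream yields 65 in one case and "A" in the other.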

But even the letter "A" itself does not really carry any meaning. It is merely one of many symbolic elements needed to form a word such as "ASSEMBLE". This now acts as a label for an action. Other words such as "MOLECULE" act as labels for objects. Nevertheless, groups of valid words do not necessarily convey meaning.

At the time of writing, there is an epidemic of scam emails, which generally seem to emanate from China. These emails contain text to try to fool email spam filters into accepting them as genuine emails. The text they carry comprises bunches of valid words that start with an initial capital letter and end with a full stop. But they do not form sentences that make any sense. So, although they contain a lot of syntactic information, they convey no meaning. Such text comprises lots of bits of information that are essentially unrelated to each other.

This is the kind of information that is the converse of entropy. A decrease in entropy is equivalent to an increase in syntactic information content. An assembly of connected atoms the size of a DNA molecule contains a vast amount of syntactic information. However, this does not necessarily mean that it carries a coherent body of meaning that can be interpreted as instructions and data for constructing and operating a complex biological life-form like a human being. It may simply be the molecular equivalent of one of those scam emails.

Semantic Information

A molecule of human DNA contains more than syntactic information. Its syntactic symbols are organized globally so that the DNA molecule as a whole represents a coherent body of meaning that relates to something else. It represents the structural data and procedural instructions for constructing and operating a human life-form within its Gaian environment.

This unified body of meaning is what I call the DNA molecule's semantic information content. This has nothing to do with entropy or the laws of thermodynamics. The quantity of semantic information within a DNA molecule cannot be determined by the laws of physics.

An example of semantic information is the sentiments conveyed in a poem. These are inherent neither within the paper on which the poem is written nor within the ink with which the poem is written. Likewise, the semantic information content of a DNA molecule is inherent neither within the DNA molecule itself nor within the bases or atoms of which it is composed.

In order for the sentiments of a poem to be made manifest, they must be read and interpreted by an appreciative reader. Likewise, in order for a human life-form to be constructed and operated, the semantic meaning must be interpreted from the molecular syntax by some kind of separate and external biological interpreting mechanism. Thus, both the DNA molecule (the printed page) and the biological interpretation mechanism (the appreciative reader) must exist separately and concurrently. Furthermore, both must work with the same language. And language is a matter of convention. It is not a unique consequence of natural law.

So how can the semantic information content of a DNA molecule have got there? How can the amount of semantic information of a DNA molecule be increased in order to produce a more complex life-form? To deal with these questions, I will introduce a measure that I have decided to call semtropy. It is for semantic information what entropy is for syntactic information.

Semtropy and Semantic Information

For a life-form to grow more complex, each of the cells of which it is composed must undergo a decrease in semtropy. This is equivalent to an increase in the quantity of externally significant meaning a cell contains.

To illustrate the notion of externally significant meaning, I will use a snippet of Java code from a global navigation package that I wrote.

```java
private double getGPSheading() {            // USES ALL BEARINGS RELATIVE TO AIRCRAFT
    if (toFlag) {                           // if approaching current waypoint
        pw.DandB();                         // compute bearing of previous waypoint
        ReqHdg = 2 * rBrg - pw.getrBrg() - Pi;
    } else {                                // else if receding from current waypoint
        nw.DandB();                         // compute bearing of next waypoint
        ReqHdg = 2 * nw.getrBrg() - rBrg - Pi;
    }                                       // Steer twice reverse of difference between
    return ReqHdg;                          // bearing of next waypoint and extended
}                                           // bearing line from previous waypoint.
```

The above code constitutes a small Java "method" or self-contained procedure. This method is vital to the navigation package. It does a very small but absolutely essential job. Without it, the entire navigation system won't work. Understanding the code is irrelevant. It is sufficient to observe that it comprises lots of letters and other typographical characters.

Suppose a cosmic ray bombs the memory device containing the executable version of this little method. The ray destroys one of the bistables that holds the state of one of the bits (0 or 1). The syntactic information-content of the package is reduced by one bit. The byte of which it was a part becomes unreadable. The method no longer makes sense to the interpreter that is running it. The navigation system can no longer function. The air vehicle crashes.

Now suppose that the cosmic ray simply manages to reverse one of the bits in the memory module. In this case, the syntactic information content is unchanged. Nevertheless, the byte of which it was a part is changed. So again, the method no longer makes sense to the interpreter that is running it. The navigation system can no longer function. The air vehicle crashes.

Although, in this case, the syntactic information-content remains unchanged, the semantic information-content is reduced drastically. The method no longer functions as part of the navigation package. Even if, in the remotest possibility, the bombed bit changed to form another valid program instruction, that program instruction would be wrong. It would cause the method to function erroneously. So the air vehicle would undoubtedly still crash.
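The mechanics of a single reversed bit can be sketched in a few lines of Java. This is only an illustration under simplifying assumptions: it flips a bit in a line of source text rather than in an executable image, and the class and method names are hypothetical. It shows only that the byte count stays the same while the content changes. It makes no attempt to model what a real compiler or interpreter would then do with the altered bytes.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical demo class, not part of the navigation package.
class BitFlipDemo {

    // Return a copy of the data with exactly one bit inverted.
    static byte[] flipBit(byte[] data, int byteIndex, int bitIndex) {
        byte[] copy = data.clone();
        copy[byteIndex] ^= (byte) (1 << bitIndex);
        return copy;
    }

    public static void main(String[] args) {
        byte[] original = "return ReqHdg;".getBytes(StandardCharsets.US_ASCII);

        // Invert the lowest bit of the first byte: 'r' (0x72) becomes 's' (0x73).
        byte[] flipped = flipBit(original, 0, 0);

        System.out.println(original.length == flipped.length);              // true
        System.out.println(new String(flipped, StandardCharsets.US_ASCII)); // seturn ReqHdg;
    }
}
```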

If I were to encrypt the text of my little Java method in order to transmit it securely across the Internet, it would be unreadable by humans, program compilers and interpreters alike. But its syntactic information-content would be unchanged. Nonetheless, its semantic information content would effectively become zero. That is, until somebody passed it through a compatible decryption process at the other end of the communication link.
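A toy example may help to picture this. The sketch below uses a repeating-key XOR, which is not a secure cipher and is assumed here purely for illustration. The key and all names are hypothetical. It shows that the scrambled text has the same number of bytes as the original, differs from it, and is restored only by applying the matching key again.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Hypothetical demo class; repeating-key XOR is a toy, not real encryption.
class XorCipherDemo {

    // XOR every byte of the data with the key, repeating the key as needed.
    // Applying the same key twice restores the original bytes.
    static byte[] xor(byte[] data, byte[] key) {
        byte[] out = new byte[data.length];
        for (int i = 0; i < data.length; i++) {
            out[i] = (byte) (data[i] ^ key[i % key.length]);
        }
        return out;
    }

    public static void main(String[] args) {
        byte[] plain = "return ReqHdg;".getBytes(StandardCharsets.US_ASCII);
        byte[] key = "k3y".getBytes(StandardCharsets.US_ASCII); // illustrative key

        byte[] scrambled = xor(plain, key);
        byte[] restored = xor(scrambled, key);

        System.out.println(scrambled.length == plain.length);  // true
        System.out.println(Arrays.equals(scrambled, plain));   // false
        System.out.println(Arrays.equals(restored, plain));    // true
    }
}
```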

In each case, the syntactic information content of the method either remained unchanged or was reduced. It never increased. Furthermore, its semantic information content became zero. The entire system became dysfunctional. Thus, an event that destroys or changes information randomly can never increase the coherent intelligence within a symbolic program.

Some events could possibly cause bases to be added to a DNA molecule. However, this would be like randomly adding a typographical character to the above Java method. It would increase the syntactic information-content of the method and, thereby, of the package as a whole. But it would have no relevance to the function of the method. It would probably result in the method becoming unintelligible to the compiler or interpreter of the program. Then, as before, the navigation package would become faulty and the aircraft would crash.

So it is vital to make the distinction between syntactic information-content (the "converse" of entropy) and semantic information content (the converse of semtropy). It is the difference between a random conglomeration of letters and an integrated work of literature. Arbitrary random processes acting under the blind forces of natural law cannot constructively increase or alter the semantic information content of a symbolic archive such as a computer software package or a DNA molecule. They can only corrupt it, reduce it or destroy it.

The Language Barrier

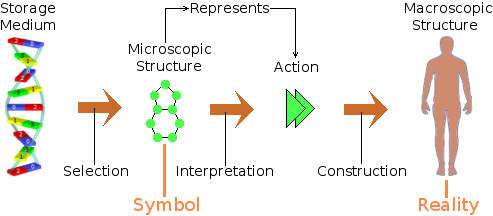

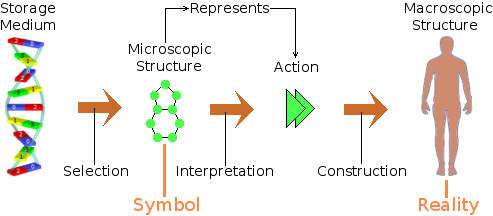

What essentially prohibits even a localized decrease in semtropy can be described as a language barrier. Consider the fundamental principles involved in the development of a human life-form from a single embryonic cell.

An intelligent selection mechanism within the cell has to select an appropriate extent of code from the cell's information storage medium. This comprises a set of microscopic structures such as chemical bases. But these are just the equivalent of black squiggly lines on white paper. They carry no inherent meaning. However, the way in which they are arranged in sequence does carry meaning, but only to an interpreting device (an appreciative reader) that can recognize them as being the symbols of a language.

Nevertheless, the symbols alone mean nothing. They may be just the equivalent of one of those scam emails. The order in which the symbols appear must form a meaningful (semantic) message that is written in the same language that the interpreting device (appreciative reader) understands.

Syntactic information pertains only to the medium that carries it. It pertains to nothing beyond the medium that carries it. For example, a gas molecule "carries" information about its velocity and direction of motion at a particular time. A gas molecule carries information only about itself.

Semantic information, on the other hand, pertains to something beyond the medium that carries it. It carries information about something else. The base sequences of a DNA molecule within a living cell, for example, carry information that pertains to the macroscopic life-form of which the cell forms only a minute part. Furthermore, the DNA molecule is of a form and size that is totally unrelated to the form and size of the life-form to which it pertains.

The pivotal difference with semantic information is that it involves the concept of representation. It uses a symbol (e.g. a chemical base sequence) to represent an action pertaining to the process of constructing a life-form (e.g. a human being) that can be 15 orders of magnitude bigger than the symbol and that bears no physical or functional resemblance to it. And representation is a matter of convention. In other words, which sequence of chemical bases represents which of the actions involved in constructing and operating a human being is a matter of convention. It is not a consequence of the laws of physics.

The set of conventions that relate the molecular symbols to their respective actions in the construction and operation of the life-form together form a kind of language. The notion of representation and the elements of language are nowhere apparent in the inorganic universe that is governed by physical law.

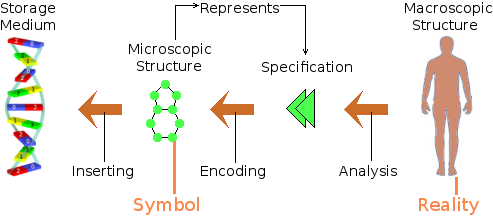

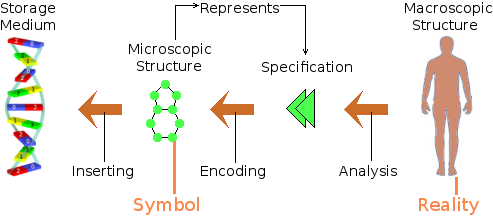

Consequently, to increase the meaningful information content of a living cell, in order to effect an increase in life-form complexity, the following must take place.

The life-form's Gaian environment must be observed and analysed. A judgement must be made as to what kind of additional structure and functionality would grant the best survival and development advantage to the life-form. This requires the services of a systems analyst who is capable of speculating about additions and alternatives to the status quo. This, in turn, requires the power of conscious abstract thought.

The desired additional structure and functionality must then be adjusted to integrate with the life-form as it currently exists and then formally specified. This requires the services of a systems engineer.

The additional structures and functions must then be encoded. They must be expressed in terms of the representative symbols and grammar defined by the conventions of the genetic code. This requires the services of a programmer with a foreknowledge of the genetic coding language.

The new stretch of code must then be inserted at the appropriate place in the storage medium to form a new seamless stream of semantic information. This requires the services of a systems integrator with complete knowledge of the original life-form's biosystem.

Judgement, abstraction, representation and the manipulation of semantic information are currently found nowhere outside the human mind. Consequently, if life did evolve from simple to complex, science must still seek a mechanism that is capable of accomplishing it.


© Written June-December 2009 by Robert John Morton